What is data wrangling?

Data wrangling, also referred to as data munging, is the process of transforming raw data from varied sources into structured, clean, complete, and validated data sets that are suitable for the kind of advanced analytics driving many of today’s big data initiatives. Data wrangling transforms the raw data into higher quality data, which in turn makes it possible for organizations to derive the maximum value from their analytics projects. This is especially true for projects that rely on machine learning, deep learning, or other forms of artificial intelligence.

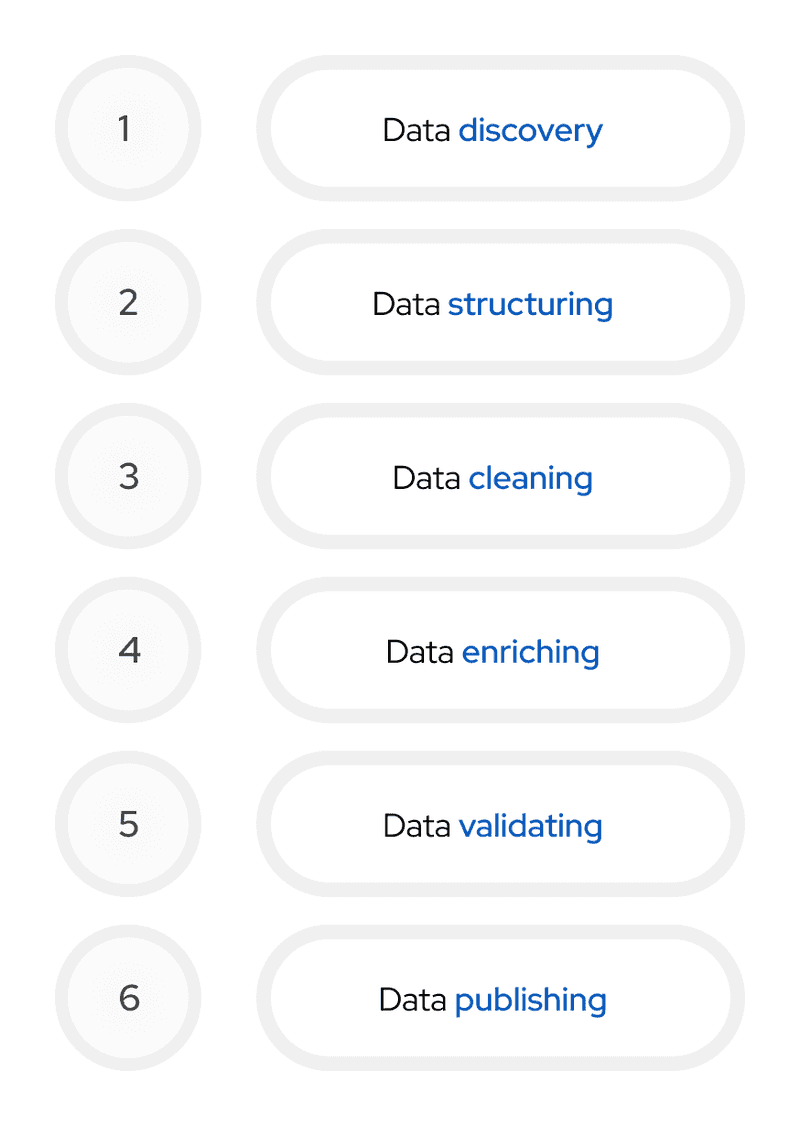

Data wrangling is an iterative process that includes multiple steps for turning raw data into accurate and valid information. Although data engineers approach the wrangling process in different ways, they often break it down into the following six steps:

-

Data discovery. During this phase, data engineers should identify the potential sources of raw data, determine which of that data is available and viable, and then try to make as much sense of it as possible. The data might include spreadsheets, log files, social media feeds, databases, text files, or an assortment of other types. Data engineers should know how much data they have and the shape of that data. They should also assess its quality, characteristics, and usefulness in answering business questions and delivering value.

-

Data structuring. After they fully understand the data, data engineers should restructure and organize the data to meet the requirements of their analytics projects. Raw data comes in a variety of shapes and sizes, and that data needs to be made usable for the analysis. Data structuring might include steps such as converting units, pivoting tables, transforming unstructured data into structured data, or doing whatever else it takes to standardize the data to support these projects.

-

Data cleaning. Along with structuring the data and refining its format, data engineers must thoroughly clean the data to ensure that it does not distort the analytics. As part of this process, data engineers need to remove duplicates, address outliers, fix errors, delete bad data, supply missing values, address inconsistencies, and take whatever other steps necessary to ensure that the data is accurate and usable.

-

Data enriching. In this phase, data engineers should assess the cleansed data to determine whether it contains enough information to properly carry out the intended analytics. If the data is lacking, the engineers will need to enhance it with additional data that provides more context or clarity. For example, data engineers might want to enhance their data with information from the organization’s internal customer relationship management (CRM) system or from a data broker service that tracks customer purchase histories.

-

Data validating. After data engineers get their data into a structured, cleansed, and complete state, they need to validate the data to ensure that it’s accurate, consistent, and free from errors. This step typically requires the use of programming and third-party tools to develop the scripts and queries necessary to automate the validation process. To this end, data engineers commonly take a test-driven approach to validation that is carefully documented and repeatable.

-

Data publishing. The final stage in the data wrangling process is to make the transformed data available to the people who need it. The exact approach to publishing the data will depend on the supported projects, but any stakeholders who need access to that data should be able to use it in its clean form as efficiently as possible. From this data, they should be able to perform their analyses, run AI applications, generate reports, create visualizations, or use the data in other ways that help them gain insights and make business decisions.

Data wrangling is one of the most important phases in any big data project, but it is not the only phase. It is part of a larger effort that establishes a data pipeline between the data sources and applications that consume the data. For example, data needs to be collected from the sources before it can be wrangled and then ingested by the applications after it has been transformed. Each organization will approach their big data projects in different ways and use different tools to implement them, but whatever their strategies, many will benefit by incorporating data wrangling into their efforts.

The importance of data wrangling

For most big data initiatives, data wrangling plays a pivotal role in ensuring that the data is properly prepared for the analytical process. As data grows more diverse, complex, and greater in scale, so does the need for a robust strategy that provides reliable and accurate data. Data wrangling makes it possible to transform raw data into a format suited to the most stringent demands of analytics and AI.

The importance of data wrangling to big data analytics cannot be overstated. Without it, a project is destined to deliver substandard results or fail altogether. For example, data wrangling can play a critical role in training AI workloads. If the data is not properly prepared, data scientists could end up with inefficient or inadequate data models, leading to results that cannot be fully trusted. Only with accurate and complete training data can data scientists hope to produce reliable models that lead to better predictions. In fact, any big data project can benefit from properly prepared data sets:

-

Improved data quality. One of the most important benefits of data wrangling is that it results in higher quality data, including the metadata. Data wrangling makes it possible to handle all types of data (structured, semi-structured, and unstructured), as well as data pulled from multiple sources. After data has been properly transformed, it is more accurate, contains fewer errors, and is more consistent. Wrangling also reduces duplicate data and, in some cases, provides missing values. In general, an effective wrangling strategy can lead to data that is more precise, complete, and reliable, making it better suited for advanced analytics and AI.

-

Better decision-making. The improved data quality means that stakeholders can make faster and more informed decisions. The transformed data is reliable, trustworthy, and provides a more accurate picture of the organization’s business, compared to what raw data can offer. Data wrangling can also make it possible to perform real-time or near real-time analytics, which enables decision-makers to respond more quickly to unexpected events or emerging trends. In addition, the integrated and cleansed data can lead to new and more valuable insights that might have otherwise been missed.

-

Increased productivity. Data wrangling makes it easier, quicker and more efficient to work with large volumes of data from disparate sources, especially as more of the wrangling process is automated. Not only does this save time when integrating and transforming data, but also when examining and analyzing the data because it is now so much cleaner. This results in greater efficiency and productivity for those analyzing the data, as well as for those relying on the analytics. At the same time, data engineers are freed up from more manual, time-consuming tasks so they can focus on efforts that deliver a higher value. Data that is cleansed, structured and consistent can also improve collaboration and data sharing.

-

Reduced costs and increased revenue. An effective data wrangling strategy has the potential to reduce costs and increase revenue. The more streamlined and automated the wrangling process, the more cost-effective it becomes to transform the data over the long-term. In addition, analysts spend less time on manual data management tasks and more time on the analytics. The cleaner data also leads to more effective and faster analytics, resulting in deeper insights into the data and the ability to act on that data more quickly, both of which can translate to increased revenue. Wrangling can also enhance collaboration, providing further opportunities for cutting costs and generating revenue.

Despite these benefits, data wrangling is not without its challenges. Contending with such large amounts of raw data—which is often inconsistent, inaccurate, or in other ways inadequate—can be a difficult, resource-intensive process, especially when integrating data from multiple sources. As the data volumes grow, so do these challenges. At the same time, organizations are under increasing pressure to protect privacy and comply with applicable regulations, making it even more difficult to contend with the growing volumes of data.

Data engineers can help mitigate these challenges by thoroughly understanding the source data and automating the wrangling processes wherever possible. As part of this effort, they should make use of tools and technologies that can enhance and streamline their operations. They should also carefully document their procedures and data transformations, which can help streamline their operations, as well as improve transparency and foster collaboration.

Until recently, data wrangling has primarily been a series of manual steps, often representing a significant portion of a project’s efforts. However, there has been slow but steady progress into automating at least some of these steps, often with the help of machine learning and other AI technologies. Such technologies can automatically help identify issues with the raw data, correct those issues, and apply structure to the entire data set, bringing intelligence to the data transformation process.

Data wrangling and big data

Data volumes are not going to get smaller or less complex any time soon, nor are organizations likely to give up on their big data initiatives. If anything, data volumes will continue to grow at an exponential rate, the data will become increasingly diverse, and organizations will keep on looking for ways to extract value from the data, often using advanced analytics to gain deeper insights and make more effective decisions.

By adopting a robust data wrangling strategy, organizations can improve the quality of their data, which in turn could help drive better decision-making and productivity, as well as reduce operating costs and increase revenues. It might not be easy or simple to implement such a strategy, but if done correctly, it could lead to improved analytics with more valuable outcomes, making the effort well worth the time and investment.