Privacy in the Age of AI

The world is generating more data than ever, much of which is shared online. AI systems love all this data, gobbling it up from wherever they can, even if it contains personal information. Data might be scraped from any number of public sources, collected as part of a company’s private data, or pulled directly from users’ input.

The amount of personal data that AI systems now consume is growing at a staggering rate, yet users often have little control over what happens with that data. At the same time, many AI systems lack adequate safeguards to protect the data from cyberthreats or to keep bias out of their results. Vendors also tend to offer little transparency into how their algorithms work, how they use the data, or how long they retain it.

In many of today’s AI systems, private data can be easily misused or compromised, leading to identity theft, fake images, false information, or other abuses. As AI systems process data and identify patterns, they might draw biased conclusions or infer personal information. If this inference is combined with discriminatory analytics, it can result in decisions being made about individuals based on perceived characteristics or beliefs, rather than actual facts.

In recent years, concern about data privacy has escalated, despite the many benefits that AI promises. Some of this concern is reflected in the growing call for legislation that addresses AI head-on. Although a variety of regulations are now in place to protect privacy, such as the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) in the US, some believe that they don’t fully address AI concerns.

For this reason, additional laws are being put forth to better target AI. The European Commission, for example, has proposed the Artificial Intelligence Act to address the risks and challenges that come with AI, and California voters approved the California Privacy Rights Act (CPRA), which amends the CCPA to give consumers more rights to opt out of automated decision-making technologies such as AI.

Other US states are also following suit, as are many other countries. Even the White House has gotten into the act with its Blueprint for an AI Bill of Rights, along with an executive order that combats algorithmic discrimination.

Emerging from these trends is a call for responsible AI, which promotes the goal of using AI in a way that is safe, trustworthy, transparent, fair, ethical, explainable, and free from bias. Responsible AI protects user privacy and supports consumers’ ability to make informed decisions about their personal information. Many organizations have come to recognize the value of such an approach and are looking for ways to be more responsible in their own AI initiatives.

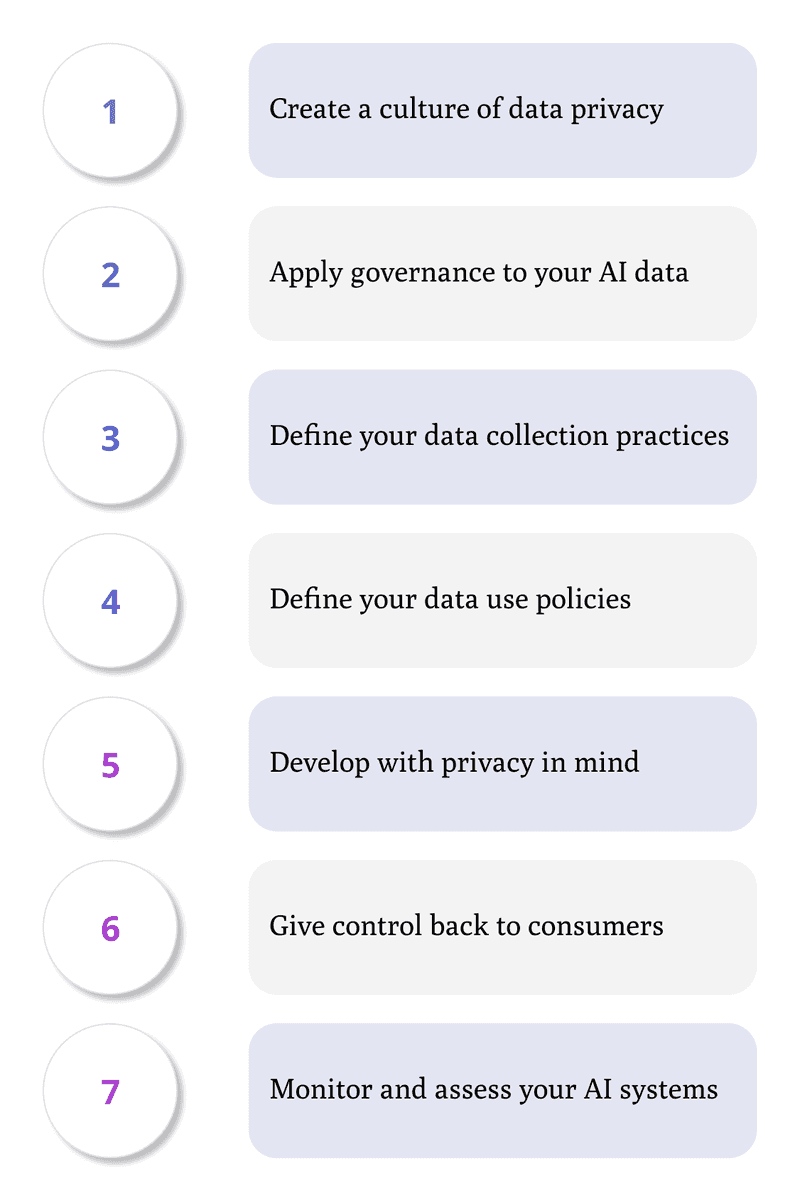

7 Ways to Protect User Privacy in AI

Organizations that hope to achieve responsible AI must confront the issue of privacy head-on. To help with this process, we’ve provided seven guidelines for addressing privacy in AI initiatives. This is by no means an exhaustive list, nor will every organization approach privacy in the same way. But these guidelines can provide a good starting point for learning ways to protect personal information and deliver responsible AI.

1. Create a culture of data privacy

Your organization should strive to create an environment that prioritizes data privacy in all your AI designs. You should have a clear vision of what you’re trying to achieve with your AI initiatives and what it will take to reach those goals in a way that protects personal information. This is also a good time to identify the stakeholders responsible for developing, deploying, and managing your AI solutions and to establish a system of accountability that holds them to your organization’s standards.

Another important step is to assess your AI designs for privacy risks and bias. Some organizations establish an ethical review process to ensure that their AI initiatives align with their privacy goals. Those participating in your AI projects should be trained in how to prioritize and maintain privacy and be encouraged to stay current in the regulatory landscape.

2. Apply governance to your AI data

Your governance framework needs to incorporate your AI data, if it doesn’t already. A governance strategy should ensure that your AI initiatives meet security and privacy requirements and adhere to applicable regulatory standards. You might need to assign additional staff to this effort so they can properly evaluate your current systems and incorporate what could potentially be a vast amount of AI data.

A solid governance framework protects sensitive AI data from internal and external threats and helps to meet compliance requirements. The framework maps out how data should be catalogued, processed, stored, retained, and protected, using measures such as encryption, firewalls, access controls, and intrusion detection. Many organizations adopt industry standards such as the Cybersecurity Framework from the U.S. National Institute of Standards and Technology (NIST). Cloud vendors also provide tools, such as Microsoft Purview Compliance Manager, that can help companies manage compliance risks for data protection and governance.

3. Define your data collection practices

Your data collection strategy can play a critical role in achieving your privacy goals. You need to clearly define your collection practices so there are no misunderstandings about where the data is coming from or how it’s collected. Your approach to data collection should be transparent, fair, and compliant with applicable regulations. Where appropriate, consumers should be told why you’re collecting certain types of data and be able to consent to its use.

You should collect only the type and amount of personal data that is necessary to support your AI initiatives without violating privacy rights. Although this seems to contradict the basic precepts of AI, which thrives on data consumption, careless collection practices can put your organization at risk for privacy violations. Your goal should be to protect personal information and autonomy at all times. If you do collect personal data, you should consider steps such as anonymization, de-identification, differential privacy, and data aggregation.
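
To make a few of these steps concrete, here is a minimal Python sketch of three of them: replacing a direct identifier with a keyed hash, coarsening an exact age into a band, and adding Laplace noise to an aggregate count (a basic form of differential privacy). The function names, the key, and the epsilon value are all illustrative assumptions, not part of any particular product or standard. Note that a keyed hash is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac
import random

# Hypothetical key for illustration only; a real key would live in a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def generalize_age(age: int, width: int = 10) -> str:
    """Coarsen an exact age into a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution."""
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace distribution with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise; a count has sensitivity 1."""
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random()
    record = {"user_id": "alice@example.com", "age": 34}
    safe_record = {
        "user_key": pseudonymize(record["user_id"]),
        "age_band": generalize_age(record["age"]),
    }
    print(safe_record)
    print(noisy_count(1200, epsilon=0.5, rng=rng))
```

A smaller epsilon adds more noise and gives stronger privacy at the cost of accuracy, so the right value depends on how the aggregate will be used.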

4. Define your data use policies

Your data use policies should clearly define how private data can be used in your AI initiatives. For example, a medical facility might need to collect an extensive amount of personal information, but using any of that data for AI must be carefully controlled to avoid violating patient privacy. Any use of personal data must be transparent, fair, and ethical.

Those responsible for delivering an AI solution should know exactly what data they can use as well as how and when they can use it. Your organization must put into place the guidelines and controls necessary to prevent both intentional and unintentional data misuse. Some organizations employ protection strategies such as data enclaves (to segregate the data) or federated learning (to decentralize the training data). Personal data that is collected for one purpose should not be used for another purpose, unless the individual has consented to its use.
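
The purpose rule in the last sentence can be enforced in code as well as in policy. The sketch below is one possible way to do it, assuming each record carries the purpose it was collected for plus any additional purposes the individual has agreed to. The `ConsentRecord` type, its field names, and the purpose labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    subject_id: str
    collected_for: str  # the purpose stated at collection time
    consented_purposes: set[str] = field(default_factory=set)  # extra purposes agreed to later


def may_use(record: ConsentRecord, purpose: str) -> bool:
    """Allow use only for the original purpose or an explicitly consented one."""
    return purpose == record.collected_for or purpose in record.consented_purposes


def filter_for_purpose(records: list[ConsentRecord], purpose: str) -> list[ConsentRecord]:
    """Keep only the records that may be used for the given purpose."""
    return [r for r in records if may_use(r, purpose)]


if __name__ == "__main__":
    records = [
        ConsentRecord("p1", "treatment"),
        ConsentRecord("p2", "treatment", {"model_training"}),
    ]
    usable = filter_for_purpose(records, "model_training")
    print([r.subject_id for r in usable])
```

Running a filter like this before data reaches a training pipeline helps guard against unintentional misuse, because records without matching consent never leave the source system.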

5. Develop with privacy in mind

Development teams play a crucial role in protecting privacy. By incorporating privacy-by-design principles into their development efforts, they can build AI systems that protect personal information and adhere to ethical principles. Team members should be educated and trained in the risks that their designs might pose to security, privacy, and compliance and be kept up to date with emerging trends and technologies, such as leveraging blockchain to decentralize AI.

Development teams should follow their usual best practices when building AI systems. For example, they should protect source files, conduct risk and impact assessments, perform code reviews, document solution designs, and extensively test their designs. They should also verify the accuracy of the data they’re working with and ensure that training and testing data is representative. Developers should strive to remove any forms of bias from their algorithms and data sets, always working toward the goal of fairness, while minimizing the use of personal data wherever possible.

6. Give control back to consumers

Responsible AI ensures that consumers have control over their personal data. They should be able to understand how it is being used and have the option to prevent its use. They should also be informed if AI is being used to make decisions about them. Your organization needs to be fully transparent about your data collection and use practices so consumers can make informed decisions about their level of participation.

Some privacy laws give consumers the right to see what data has been collected and to remove or correct that data. The laws might also specify that private data cannot be used without the user’s consent. Even if you operate in a region that does not impose such restrictions, consumers are growing increasingly concerned about privacy and demanding greater control. It’s just a matter of time before legislation catches up. The more control you can give consumers now, the better your organization’s reputation will be and the more prepared you’ll be for future restrictions.
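
As a rough illustration of what those rights mean for a system’s design, the following Python sketch shows a store that supports access, correction, and erasure requests. It is an in-memory toy under stated assumptions: the `PersonalDataStore` class and its method names are hypothetical, and a real system would also have to cover backups, logs, derived data sets, and any models trained on the data.

```python
from copy import deepcopy


class PersonalDataStore:
    """Toy in-memory store that supports basic data subject requests."""

    def __init__(self):
        self._records = {}

    def save(self, subject_id, data):
        """Store a copy of the data held about one individual."""
        self._records[subject_id] = dict(data)

    def access(self, subject_id):
        """Return a copy of everything held about the subject."""
        return deepcopy(self._records.get(subject_id, {}))

    def correct(self, subject_id, field_name, value):
        """Update a single field at the subject's request."""
        if subject_id not in self._records:
            raise KeyError(subject_id)
        self._records[subject_id][field_name] = value

    def erase(self, subject_id):
        """Delete the subject's data; return True if anything was removed."""
        return self._records.pop(subject_id, None) is not None


if __name__ == "__main__":
    store = PersonalDataStore()
    store.save("u1", {"email": "old@example.com"})
    store.correct("u1", "email", "new@example.com")
    print(store.access("u1"))
    print(store.erase("u1"))
```

Building these operations in from the start is usually easier than retrofitting them after regulations require it.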

7. Monitor and assess your AI systems

Your commitment to privacy does not stop after you’ve deployed your AI solution. You should continuously monitor and audit the system and its data output. It can also be useful to track how users interact with the system and to determine data access and usage patterns. As part of this process, you should identify metrics that help you discover biases, errors, false positives, and other issues. You should also establish a dedicated incident response team to handle potential and real issues as they occur.

Regulations often require certain types of auditing, but even if they don’t, you should still evaluate your AI system for bias and privacy risks. You should also perform impact assessments to determine how your solution might affect certain demographic groups and then update your model and training data accordingly. Also be sure to keep your system’s supporting firmware and software up to date to maximize security.
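
One simple metric for such an assessment is the rate of favorable decisions per demographic group, along with the gap between the highest and lowest rates (often called the demographic parity difference). The Python sketch below computes both. It is only one of several fairness measures, and the group labels and sample decisions are made-up illustrations.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the share of favorable (truthy) decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += int(bool(decision))
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Made-up audit sample: group label and model decision (1 = approved).
    groups = ["a", "a", "a", "b", "b", "b"]
    decisions = [1, 1, 0, 1, 0, 0]
    rates = selection_rates(groups, decisions)
    print(rates)
    print(demographic_parity_gap(rates))
```

A large gap does not prove discrimination on its own, but it is a useful signal that the model or its training data deserves a closer look.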

Responsible AI and User Privacy

Organizations that are moving toward responsible AI must make a concerted effort to prioritize privacy in their AI initiatives. That said, many of the steps necessary to achieve this goal are simply commonsense best practices that should be part of any development effort, such as good data governance and use policies. The key is to give careful consideration to privacy at each step of the way. However, this can’t happen without building a culture of privacy within your organization. Responsible AI also requires the full support of executives, management, decision-makers, and other key players—people who recognize the importance of privacy and are willing to invest the time and resources necessary to make it a priority.